What is an AI model anyway?

Oct 12, 2021 by Andreas Syrén

An AI model is what is known as a function approximation. As the name suggests, the concept combines two different ideas: mathematical functions and mathematical approximation.

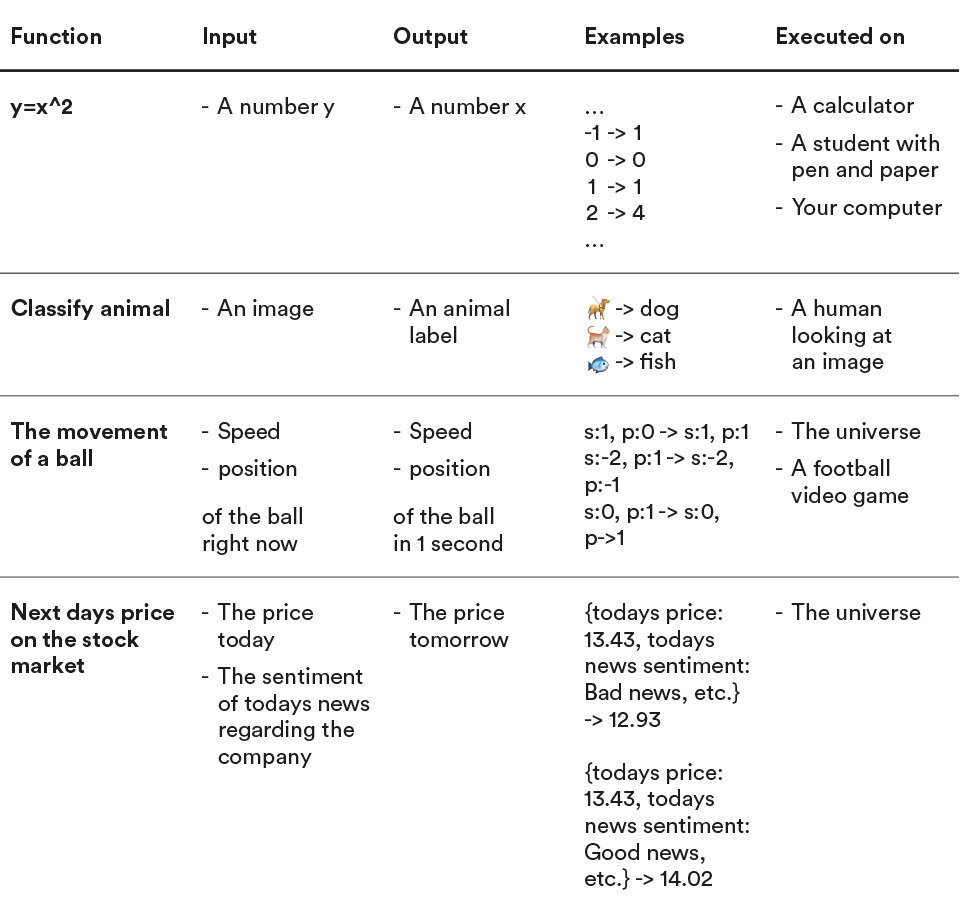

A mathematical function is the abstract notion of taking some input and mapping each input to some output. Consider these examples:

The neat thing about functions, as exemplified in the table above, is that they provide a way to reason about real-life processes in a structured framework. I can easily identify some process I am interested in and define it as something that takes some input and maps each input to some output. Importantly, we don’t need to know HOW the function works. We just need the input and output to define it.

With this in mind, it becomes clear why function approximation is such a powerful idea. In the examples above, only the first two functions are simple enough that we know how to compute them with computer code (x^2, for example, is evaluated as 3^2 = 3*3 = 3+3+3 = 9). Ideally I would like to know exactly what each one of these functions is doing. If I did, I could:

- Sort my folder of 1’000’000 mixed animal pictures automatically

- Build a robotic goalkeeper

- Predict tomorrow’s stock prices and become rich

Sadly, I don’t know this. What is my brain doing when determining if an image contains a cat? Can I program a computer to do the same thing? What exactly is it about the collection of pixels that make up a picture that makes it an image of a cat? (Answer: Who knows? What kind of question is that even?)

This doesn’t sound very promising, but we do know the inputs and outputs of the function, so it would be easy enough to provide a bunch of examples. Those examples don’t really tell you (as a human) a lot about what exactly makes the pixels of an image more cat-like. Can we find a way to approximate what the function is doing by using only the sample inputs and outputs? Yes! This is what a machine learning AI model does!

Using a bunch of inputs and outputs to find this approximation is referred to as training an AI model. To do this, you simply show the model a bunch of images of cats and, crucially, a bunch of images that are not cats so that the model can compare them (if all images are cats the answer is always “yes”, and since models are somewhat lazy, the model would just learn to ignore the image and say “yes” all the time). Then you let an optimization algorithm called gradient descent try to find the best approximation.
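To make the idea of training a bit more concrete, here is a minimal sketch of gradient descent on a toy problem. Everything in it is an assumption made for illustration: the hidden function (y = 2x + 1), the choice of a straight line as the model, and the names `samples`, `train` and `model`. A real image model has millions of parameters instead of two, but the loop has the same shape: guess, measure the error on the samples, nudge the parameters, repeat.

```python
# Sample inputs and outputs of a function we pretend not to know.
# (Assumption for illustration: the hidden rule is y = 2x + 1.)
samples = [(x, 2 * x + 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]


def train(samples, learning_rate=0.05, steps=2000):
    """Fit y ≈ w * x + b to the samples with plain gradient descent."""
    w, b = 0.0, 0.0  # start with an arbitrary guess
    n = len(samples)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        # Take a small step in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(samples)


def model(x):
    """The trained approximation of the hidden function."""
    return w * x + b


print(w, b, model(3.0))
```

Note that the training loop never looks at the rule that generated the samples. It only sees the inputs and outputs, which is the whole point.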

This is, on a high level, exactly what a deep learning model does, and what happens when you train it. You create it by defining the function, or in other words by picking a defined input and output. Then you try to approximate what the function is doing by training the model. So “training a model” is simply another way of saying that the computer is trying to find this approximation. If the approximation ends up being good, I can use the model to:

- Sort my folder of 1’000’000 mixed animal pictures automatically

- Build a robotic goalkeeper

- Predict tomorrow’s stock prices and become rich

In theory, this is amazing! In practice there are a few more details to consider. Since the AI model only finds approximations, the model is not perfect. It tries to do its best but it is not the same as the real function. It is just an approximation.

This is why it is very important to evaluate the performance of the model on new samples. Typically you split the samples (data) into two partitions: a training partition for training the model (duh!) and a testing partition to evaluate whether the model will work once you release it into the world. It is crucial that the test data is different from the training data. Otherwise you won’t be able to tell if the model decided to cheat and has simply memorised the correct output for all training samples.

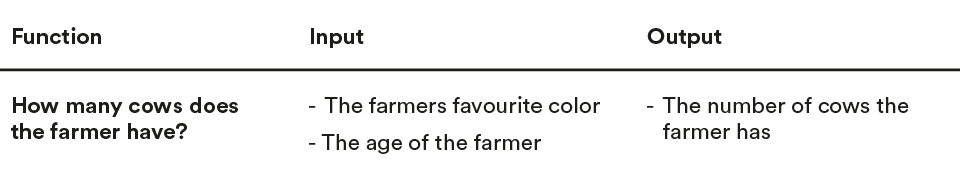
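The split can be sketched in a few lines of Python. The toy data (is a number even?), the 80/20 split, and the names `split`, `accuracy` and `memoriser` are all assumptions made for illustration. The `memoriser` is a deliberately extreme cheater: it stores every training answer and has no answer at all for inputs it has not seen.

```python
import random


def split(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and cut them into (train, test) partitions."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(predict, samples):
    """Fraction of samples for which the prediction matches the output."""
    return sum(predict(x) == y for x, y in samples) / len(samples)


# Toy data: the input is a number, the output is whether it is even.
samples = [(x, x % 2 == 0) for x in range(100)]
train_set, test_set = split(samples)

# A "cheating" model that only memorises the training samples.
memory = dict(train_set)


def memoriser(x):
    # Returns None for any input that was not in the training data.
    return memory.get(x)


print("train accuracy:", accuracy(memoriser, train_set))
print("test accuracy:", accuracy(memoriser, test_set))
```

Measured on the training partition alone, the memoriser looks perfect. Only the test partition reveals that it learned nothing about the function itself.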

Another problem sometimes encountered when building AI models in a business setting is that the function defined may not even exist! 😱 Consider this nasty function definition, for example:

Clearly this function is complete nonsense: color and age have nothing to do with cows. While in this example it is clear why a machine learning model is going to fail, in real business use cases this problem might be a lot harder to spot. The model will, in its determination to do the best job possible, settle for the least bad approximation it can find, which is some function that actually exists. In the example about the farmer, the model would probably just find the most common number of cows in the training data (say, 45 for the sake of argument) and always guess that number. It may not be the function you were hoping for, but number_of_cows(color, age)=45 (always) at least exists and gets it right more often than any other number.
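Here is a small sketch of what that fallback amounts to. The training data is made up for illustration, as are the input types (a color string and an age in years) and the helper name `train_constant_model`. A real model would not literally be written this way, but when the inputs carry no information, the result of training tends to behave like this.

```python
from collections import Counter

# Hypothetical training data: ((color, age), number_of_cows).
# The inputs are unrelated to the output, as in the example above.
training_data = [
    (("red", 30), 45),
    (("blue", 52), 45),
    (("green", 41), 12),
    (("red", 67), 45),
    (("blue", 25), 80),
]


def train_constant_model(samples):
    """Return a model that ignores its inputs and always guesses
    the most common output seen in the training data."""
    most_common, _count = Counter(y for _, y in samples).most_common(1)[0]

    def number_of_cows(color, age):
        return most_common

    return number_of_cows


number_of_cows = train_constant_model(training_data)
print(number_of_cows("purple", 99))
```

Comparing a trained model against a constant guess like this one is a cheap sanity check: if the model is no better than the constant, the function you defined may not exist.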
